Common scenario: your organic sessions are down and your Google Search Console (GSC) rankings look steady, yet competitors who historically didn't rank for your queries are showing up in AI-generated answers (Google's AI Overviews, ChatGPT, Claude, Perplexity). Finance and leadership are asking for hard ROI, and the pressure is real. Still, there are concrete diagnostics and experiments that produce defensible attribution and help reclaim qualified leads.

This Q&A walks through fundamentals, misconceptions, tactical implementation, advanced techniques, and future implications. The approach is data-first, skeptically optimistic, and focused on experiments and proof rather than fear-based hypotheses.

1) Introduction — common questions you’ll want answered

Stakeholders typically ask:

- “If rankings are stable, why are sessions dropping?”
- “Why do competitors with worse SEO scores get our leads?”
- “How do we see what AI models say about our brand?”
- “How can I prove attribution and ROI so budget isn’t cut?”

Short takeaway: ranking position is only one input to traffic and lead flow. SERP composition, AI answer boxes, query intent shifts, CTR behavior, and on-site conversion are equally decisive. Below we unpack each item and give practical diagnostics, step-by-step implementation, and experiment templates for proof.

2) Question 1: Fundamental concept — Why traffic falls despite stable rankings

Core idea: “Rank” is not traffic. Think of ranking as a street address and traffic as footfall in the storefront. The address is the same, but if a new shopping mall opens next door (AI overviews, featured snippets, marketplace results), fewer people walk into your store even though your address hasn’t changed.

Key reasons this happens:

- SERP feature displacement: featured snippets, People Also Ask, AI overviews, and image packs reduce CTR to organic links.
- Query intent shift: the same keyword can shift in intent over time (research → transaction), and your page may no longer match the new intent.
- Loss of branded demand: competitors’ branding or marketplace listings can capture direct clicks and queries that used to land on you.
- Personalization and localization: a subset of users sees different results, reducing aggregate sessions even when average position is stable.
- Seasonality and sampling noise: GSC data limits (anonymized queries, row caps) and day-to-day variance can hide true user-behavior trends.

Practical diagnostics (quick checklist):

- Compare position vs. clicks and CTR trends in GSC, per query and per SERP feature.
- Track changes in SERP features across time windows (tools: SISTRIX, SEMrush, Ahrefs, or automated SERP scraping).
- Segment sessions by query intent and landing page: did the intent mix change?
- Review server logs for referral patterns and large drops in specific query or user-agent (UA) cohorts.
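The first check in the list above can be scripted. The sketch below assumes you have already exported per-query rows (query, period, clicks, impressions, position) for a "before" and an "after" window, for example from GSC's Performance report; the row shape, the thresholds, and the sample numbers are illustrative assumptions, not GSC's export format. It flags queries whose impression-weighted position barely moved while CTR fell.

```python
from collections import defaultdict


def summarize(rows):
    """Aggregate clicks, impressions, and impression-weighted position per (query, period)."""
    agg = defaultdict(lambda: {"clicks": 0, "impressions": 0, "pos_weighted": 0.0})
    for r in rows:
        a = agg[(r["query"], r["period"])]
        a["clicks"] += r["clicks"]
        a["impressions"] += r["impressions"]
        a["pos_weighted"] += r["position"] * r["impressions"]
    return agg


def flag_ctr_loss(rows, max_position_change=0.5, min_relative_ctr_drop=0.2):
    """Return (query, ctr_before, ctr_after) for queries with stable position but falling CTR."""
    agg = summarize(rows)
    flagged = []
    for query in sorted({q for q, _ in agg}):
        before, after = agg.get((query, "before")), agg.get((query, "after"))
        if not before or not after or not before["impressions"] or not after["impressions"]:
            continue  # need impressions in both windows to compare
        pos_before = before["pos_weighted"] / before["impressions"]
        pos_after = after["pos_weighted"] / after["impressions"]
        ctr_before = before["clicks"] / before["impressions"]
        ctr_after = after["clicks"] / after["impressions"]
        if ctr_before == 0:
            continue
        relative_drop = (ctr_before - ctr_after) / ctr_before
        if abs(pos_after - pos_before) <= max_position_change and relative_drop >= min_relative_ctr_drop:
            flagged.append((query, round(ctr_before, 4), round(ctr_after, 4)))
    return flagged


# Illustrative rows only, shaped like the example that follows (position ~3, clicks down 40%).
rows = [
    {"query": "best budget crm 2025", "period": "before", "clicks": 500, "impressions": 10000, "position": 3.0},
    {"query": "best budget crm 2025", "period": "after", "clicks": 300, "impressions": 10000, "position": 3.1},
    {"query": "crm pricing", "period": "before", "clicks": 200, "impressions": 4000, "position": 5.0},
    {"query": "crm pricing", "period": "after", "clicks": 190, "impressions": 4000, "position": 5.2},
]
flagged = flag_ctr_loss(rows)  # [("best budget crm 2025", 0.05, 0.03)]
```

Flagged queries are the ones worth a manual SERP inspection: their rank held, so the CTR loss points at something on the results page itself.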

Example

Query: “best budget CRM 2025” — position stable at #3, clicks down 40%. SERP analysis shows a new “AI overview” with a comparison table and a sponsored marketplace card above results. Conclusion: displacement by SERP features explains CTR loss even though rank didn’t change.

3) Question 2: Common misconception — “If my competitor has a lower SEO score they shouldn't win”

Misconception: SEO score or technical audit percent represents likelihood to convert. Reality: conversion and qualified lead capture depend on intent alignment, brand signals, on-page CTAs, trust markers, and where traffic is coming from.

Factors that let “worse SEO” competitors win:

- Stronger brand or product-market fit for the query (brand searches, repeat visitors).
- Better placement in SERP features (product cards, local packs) that capture high-intent clicks.
- Higher-quality backlinks in topical clusters: quantity isn't everything.
- Superior landing-page optimization (clear value proposition, minimal form friction, tailored flows).
- Paid channels or partnerships driving targeted traffic that converts better.

Analogy: a poorly painted but well-signed store with a clear “sale” sign will outsell a shiny shop with no signage. SEO score is the paint; conversion signals are the sign and the sale price.

Practical examples and checks

- Run a landing-page conversion audit: measure time to first byte (TTFB), headline clarity, CTA prominence, number of form fields, and social-proof placement.
- Compare backlink topical relevance using Ahrefs or Majestic (whose Topical Trust Flow metric scores links by topic).
- Check assisted conversions and multi-touch conversion paths in GA4: a competitor may be winning lower-funnel nudges via email or paid social.

4) Question 3: Implementation details — how to detect, measure, and respond

Goal: create repeatable diagnostics and experiments that produce defensible ROI statements. Below are step-by-step methods.

Step A — SERP and AI overview monitoring

- Build an automated daily SERP capture pipeline for target queries: record the HTML, SERP features, and top 10 results.
- Log snapshots to S3 and parse them for featured snippets, PAAs, image packs, shopping cards, and any “AI” sections.
- Maintain a differential report that highlights queries where a new entity or brand appears in the AI overview.
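The differential report in Step A reduces to a set difference between two parsed snapshots. This sketch assumes the raw HTML has already been parsed into a per-query dict of feature labels and brands cited in the AI overview (that parsing is the fragile part and is not shown); the dict shape, feature labels, and brand names are hypothetical.

```python
def diff_snapshots(previous, current):
    """Per query, list SERP features and AI-overview brands present now but absent before."""
    empty = {"features": [], "ai_overview_brands": []}
    report = {}
    for query, today in current.items():
        before = previous.get(query, empty)  # a brand-new query counts everything as new
        new_features = sorted(set(today["features"]) - set(before["features"]))
        new_brands = sorted(set(today["ai_overview_brands"]) - set(before["ai_overview_brands"]))
        if new_features or new_brands:
            report[query] = {"new_features": new_features, "new_brands": new_brands}
    return report


# Hypothetical parsed snapshots for two consecutive days.
previous = {
    "best budget crm 2025": {"features": ["people_also_ask"], "ai_overview_brands": []},
}
current = {
    "best budget crm 2025": {
        "features": ["people_also_ask", "ai_overview", "shopping_cards"],
        "ai_overview_brands": ["CompetitorA", "YourBrand"],
    },
}
report = diff_snapshots(previous, current)
```

Keeping the diff logic separate from the scraper means a change in SERP markup only breaks the parser, not the report.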

Step B — Probing LLM answer behavior

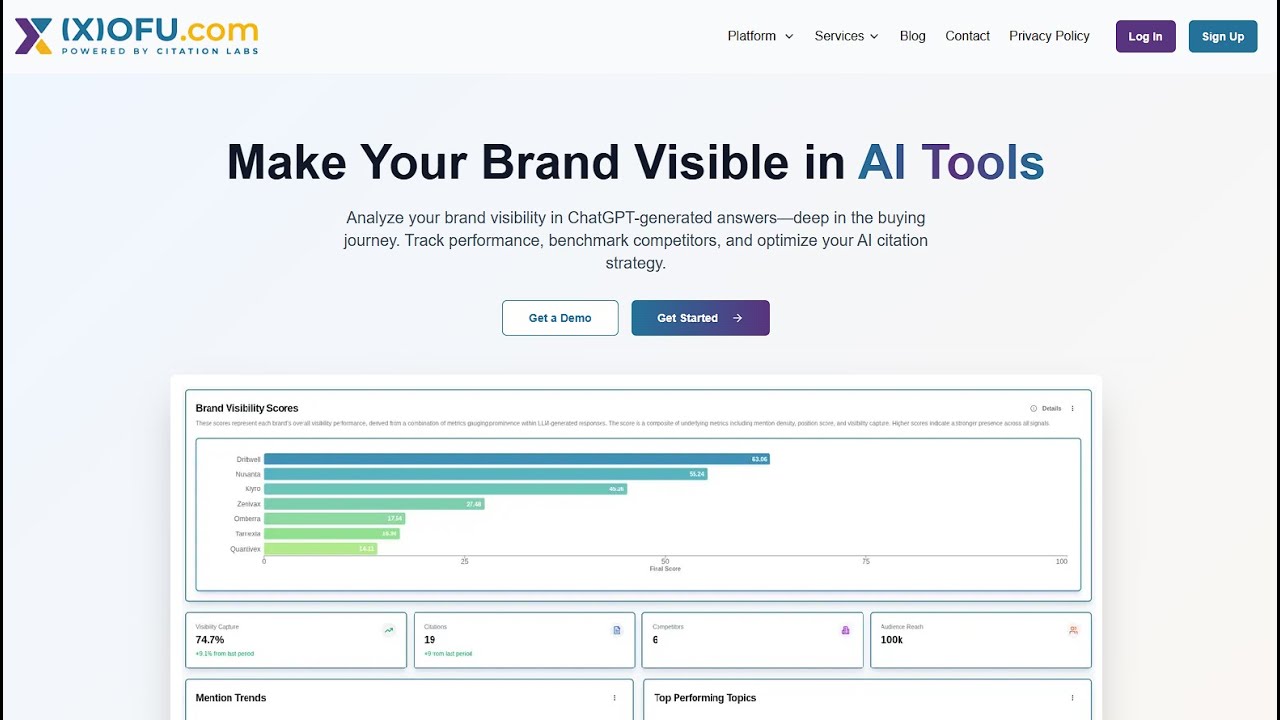

- Use model APIs (OpenAI, Anthropic, Perplexity) to run representative queries and store the answers. Respect each service's terms of use.
- Design queries that mimic real-user phrasing and intent variants (short, long-tail, question form).
- Extract brand mentions and links referenced in model responses.
- Build frequency tables by brand and claim.
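Once answers are stored, the extraction and frequency-table steps above can be sketched with the standard library. This assumes each stored answer is a dict with `model` and `text` keys (a hypothetical storage shape) and that you supply the brand list; whole-word, case-insensitive matching is a simple heuristic that will miss misspellings and paraphrases. The brand names and answer texts are made up.

```python
import re
from collections import Counter
from urllib.parse import urlparse

URL_RE = re.compile(r"https?://[^\s)\]]+")  # stops at whitespace, ")" or "]"


def brand_mentions(answers, brands):
    """Count answers per (model, brand) that mention the brand as a whole word, ignoring case."""
    patterns = {b: re.compile(r"\b" + re.escape(b) + r"\b", re.IGNORECASE) for b in brands}
    counts = Counter()
    for answer in answers:
        for brand, pattern in patterns.items():
            if pattern.search(answer["text"]):
                counts[(answer["model"], brand)] += 1
    return counts


def cited_domains(answers):
    """Count the domains of URLs that appear in answer text."""
    counts = Counter()
    for answer in answers:
        for url in URL_RE.findall(answer["text"]):
            counts[urlparse(url).netloc.lower()] += 1
    return counts


# Hypothetical stored answers; model names and brands are placeholders.
answers = [
    {"model": "model-a", "text": "Popular picks include Acme CRM and ZenSales (see https://example.com/review)."},
    {"model": "model-a", "text": "Acme CRM is often recommended for small teams."},
    {"model": "model-b", "text": "ZenSales and acme crm both offer free tiers."},
]
brand_counts = brand_mentions(answers, ["Acme CRM", "ZenSales"])
domain_counts = cited_domains(answers)
```

Counting per answer (not per occurrence) keeps one verbose response from dominating the table.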

Step C — Attribution and ROI proof

Options, ordered from lowest effort to most rigorous:

- Event-level tracking and better UTM hygiene (lowest effort): add hidden form inputs that capture UTM parameters and campaign/query metadata with each lead.
- Server-side capture of the landing referrer and query string, plus fingerprinting for session stitching.
- Randomized holdout experiments: run a search ad or content variant only in a controlled, geotargeted slice and measure the lift in conversions against the holdout. This produces causal lift estimates.
- Incrementality tests using PSA (public service announcement) control ads, or organic de-boost experiments with controlled snippet changes: these require engineering but provide strong proof.
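The server-side capture option can be sketched as a function that runs when a session first lands. It assumes your server can read the full landing URL and the HTTP Referer header; search engines typically send only their domain in the Referer, not the query. The channel rules and host lists are illustrative assumptions, not a standard taxonomy, and fingerprinting and session stitching are out of scope here.

```python
from urllib.parse import urlparse, parse_qs

UTM_KEYS = ("utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content")
# Illustrative host lists; extend them to match the referrers you actually see in your logs.
SEARCH_HOSTS = ("google.com", "bing.com", "duckduckgo.com")
AI_ASSISTANT_HOSTS = ("chatgpt.com", "perplexity.ai", "claude.ai")


def host_matches(host, domains):
    """True if host equals one of the domains or is a subdomain of it."""
    return any(host == d or host.endswith("." + d) for d in domains)


def attribution_record(landing_url, referrer):
    """Build a first-touch record from the landing URL's query string and the HTTP Referer."""
    landing = urlparse(landing_url)
    params = parse_qs(landing.query)
    record = {k: params[k][0] for k in UTM_KEYS if k in params}
    ref_host = urlparse(referrer).netloc.lower() if referrer else ""
    record["landing_path"] = landing.path
    record["referrer_host"] = ref_host
    if "utm_medium" in record:
        record["channel"] = record["utm_medium"]  # explicit tagging wins over inference
    elif host_matches(ref_host, AI_ASSISTANT_HOSTS):
        record["channel"] = "ai_assistant"
    elif host_matches(ref_host, SEARCH_HOSTS):
        record["channel"] = "organic_search"
    elif ref_host:
        record["channel"] = "referral"
    else:
        record["channel"] = "direct"
    return record


tagged = attribution_record(
    "https://www.example.com/pricing?utm_source=newsletter&utm_medium=email&utm_campaign=q3", ""
)
from_ai = attribution_record("https://www.example.com/crm-comparison", "https://www.perplexity.ai/")
```

Storing this record alongside the lead (for example in the hidden form inputs from the first option) lets you join leads back to channels deterministically.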

Instrumentation checklist:

- GA4 configured with events, conversions, and custom dimensions for query source.
- Server logs stored and parsed into BigQuery for deterministic matching.
- An experiment platform (Optimizely, LaunchDarkly) for landing-page A/B tests and holdouts.

Example experiment

Hypothesis: Adding structured comparison tables and FAQ schema will recover conversion rate for “product comparison” queries.

Control: serve the current pages to 50% of traffic. Treatment: serve the other 50% pages with enhanced schema, an HTML comparison table, short bullets for buyer intent, and a redesigned CTA. Run for 6 weeks, measure the lift in leads per 1,000 sessions, and compute a p-value or Bayesian credible interval. If the lift exceeds historical variance and cost per lead improves, tie the result to ROI and request that budget be reallocated from low-performing channels.
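For the analysis step, one simple frequentist option is a two-proportion z-test on leads per session. The sketch assumes each session produces at most one lead (so leads/sessions is a proportion) and that sessions are independent; the counts are made-up illustration, not results. A Bayesian credible interval would be an equally valid choice.

```python
from math import erf, sqrt


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1 + erf(z / sqrt(2)))


def lead_lift_test(control_leads, control_sessions, treat_leads, treat_sessions):
    """Two-sided two-proportion z-test comparing lead rates of control vs. treatment."""
    p_c = control_leads / control_sessions
    p_t = treat_leads / treat_sessions
    pooled = (control_leads + treat_leads) / (control_sessions + treat_sessions)
    se = sqrt(pooled * (1 - pooled) * (1 / control_sessions + 1 / treat_sessions))
    z = (p_t - p_c) / se
    return {
        "control_leads_per_1k": 1000 * p_c,
        "treatment_leads_per_1k": 1000 * p_t,
        "relative_lift": (p_t - p_c) / p_c,
        "z": z,
        "p_value": 2 * (1 - normal_cdf(abs(z))),
    }


# Illustrative counts: 20 vs. 24 leads per 1,000 sessions over 20,000 sessions per arm.
result = lead_lift_test(400, 20_000, 480, 20_000)
```

With these illustrative counts the relative lift is 20% and z is roughly 2.7, giving a p-value below 0.01; the same function run on your real six-week totals yields the number to put in the ROI case.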

5) Question 4: Advanced considerations — entity, knowledge graph, and strategic defenses

AI overviews and modern search are increasingly entity-driven rather than purely keyword-driven. Treat your brand as an entity with attributes that the Knowledge Graph can consume.

Advanced techniques:

- Schema-first strategy: publish clear entity pages (About, Product, Team) with rich schema (Organization, Product, Person, FAQPage, HowTo, Review). Use canonical URLs and consistent NAP (name, address, phone).
- Structured data monitoring: run daily checks for schema errors and data drift, and use a CI job to validate JSON-LD output.
- Entity backlinks: acquire mentions on high-authority, topically relevant publisher pages that label your brand explicitly (e.g., “BrandX is a financial planning app”).
- Direct answer optimization: create short, authoritative answer snippets and FAQ blocks for the queries that surface in AI overviews. Think like a knowledge graph editor.
- Conversational content: produce explainer pages formatted as short Q&As and bulleted lists, which are more likely to be summarized by LLMs in an overview.
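For the CI validation job, a minimal sketch could extract every JSON-LD block from a rendered page and check it against a team-defined list of properties. The `REQUIRED` map is a hypothetical house rule, not Google's or schema.org's official requirements, and `@graph` handling is deliberately shallow; in CI you would fail the build whenever the returned list is non-empty.

```python
import json
from html.parser import HTMLParser

# Hypothetical house rules: minimum properties your team expects per schema.org type.
REQUIRED = {
    "Organization": ["name", "url", "logo"],
    "Product": ["name", "description"],
    "FAQPage": ["mainEntity"],
}


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data  # script text may arrive in several chunks


def validate_jsonld(html):
    """Return readable errors for invalid JSON or missing required properties."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    errors = []
    for i, raw in enumerate(parser.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        if isinstance(data, dict) and "@graph" in data:
            data = data["@graph"]
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict):
                continue
            types = item.get("@type", [])
            for kind in types if isinstance(types, list) else [types]:
                for prop in REQUIRED.get(kind, []):
                    if prop not in item:
                        errors.append(f"block {i}: {kind} missing '{prop}'")
    return errors


page = """<html><head>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Organization", "name": "BrandX", "url": "https://brandx.example"}</script>
</head></html>"""
errors = validate_jsonld(page)  # ["block 0: Organization missing 'logo'"]
```

Running this against rendered HTML (not templates) catches drift introduced by CMS edits as well as code changes.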

Analogy: if the web is a map, schema and entity work are the GPS metadata that tells navigation systems (and LLMs) which building is yours and what it sells.

Table — Quick comparison of defensive tactics

| Tactic | Effort | Expected impact |
|---|---|---|
| Schema & entity pages | Medium | High for AI/knowledge-graph visibility |
| SERP snapshot monitoring | Low | High diagnostic value |
| LLM probing pipeline | Medium | Medium: shows brand mentions in AI outputs |
| Randomized holdout experiments | High | Very high credibility for ROI |

6) Question 5: Future implications — what to watch and how to prepare

Trends to monitor and prepare for:

- Increased prominence of AI-generated “overview” answers that reduce organic CTR for informational queries.
- Greater reliance on entity and knowledge-graph signals relative to raw on-page signals.
- Privacy-driven attribution changes that favor modeling and experiments over deterministic last-click measurement.
- Rise of multimodal answers (images, tables): your content must be structured and ready for multiple formats.

Concrete preparedness steps:

- Invest in first-party data: capture leads, emails, and product-usage events. This reduces dependence on third-party signals.
- Operationalize regular incrementality testing and holdouts as part of the quarterly roadmap.
- Train content teams to write “answerable” content (short, factual, with citation links and structured tables) so LLMs cite you.
- Partner with PR to build authoritative mentions on publisher sites that feed knowledge graphs.

Metaphor: think of the next five years as a shift from street signs to voice navigation. If you have only painted your storefront, you’ll be invisible to voice assistants. Add metadata and authoritative citations so you show up in spoken directions.

Final checklist to take action this quarter

- Deploy daily SERP and AI overview monitoring for your top 200 queries.
- Add schema-rich entity pages and validate JSON-LD with automated tests.
- Build an LLM probing pipeline to capture model outputs for target queries (store outputs for audits).
- Run two randomized holdout experiments on high-value landing pages to measure lift.
- Improve UTM capture and server-side session stitching for stronger attribution.

Closing note: the problem is not mysterious; it's multi-factor. Stable rankings combined with declining traffic signal a change in the distribution of clicks and intent rather than a single SEO fault. The solution is instrumented experimentation, entity-focused content, and a defensible attribution strategy. Those steps turn an emergent risk (AI overviews and changed SERPs) into measurable experiments that prove ROI and protect marketing budgets.
